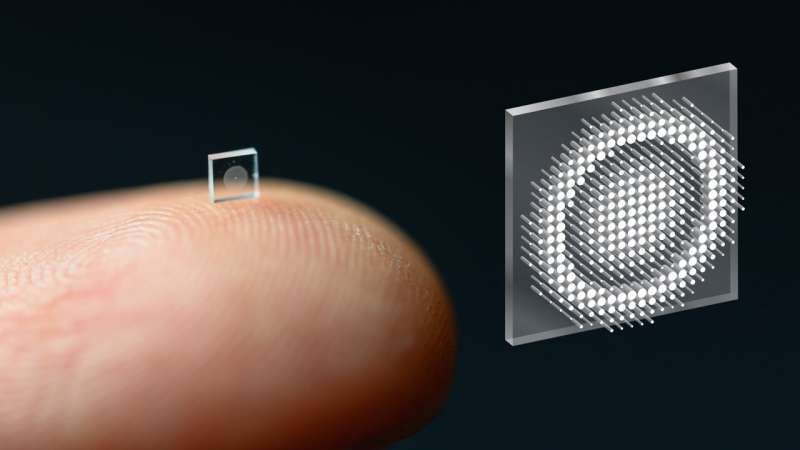

Meta-optics, 2-D arrays of subwavelength scatterers, have recently emerged as a promising candidate for drastically miniaturizing image sensors.<sup>1</sup> Like all diffractive optics, however, meta-optical lenses suffer from strong chromatic aberrations. As a result, full-color images captured by a single meta-optic have remained poor, limiting their applications in imaging. We recently demonstrated that combining machine-learning algorithms with meta-optics can dramatically miniaturize image sensors without sacrificing their performance.

Specialized scatterer designs that compensate for chromatic dispersion have been used for broadband focusing, but these lenses are fundamentally limited to very small physical apertures and low numerical apertures.<sup>2</sup> These limits can potentially be overcome by a computational-imaging paradigm, in which the meta-optic does not directly produce an image but instead works together with post-processing computation to recover a high-quality image.

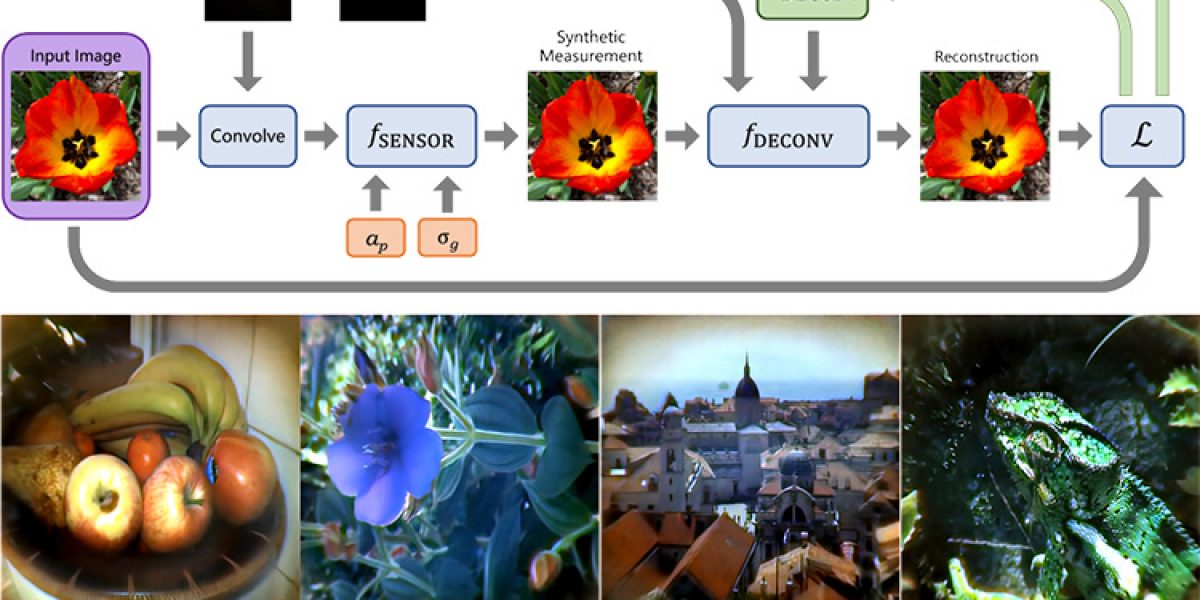

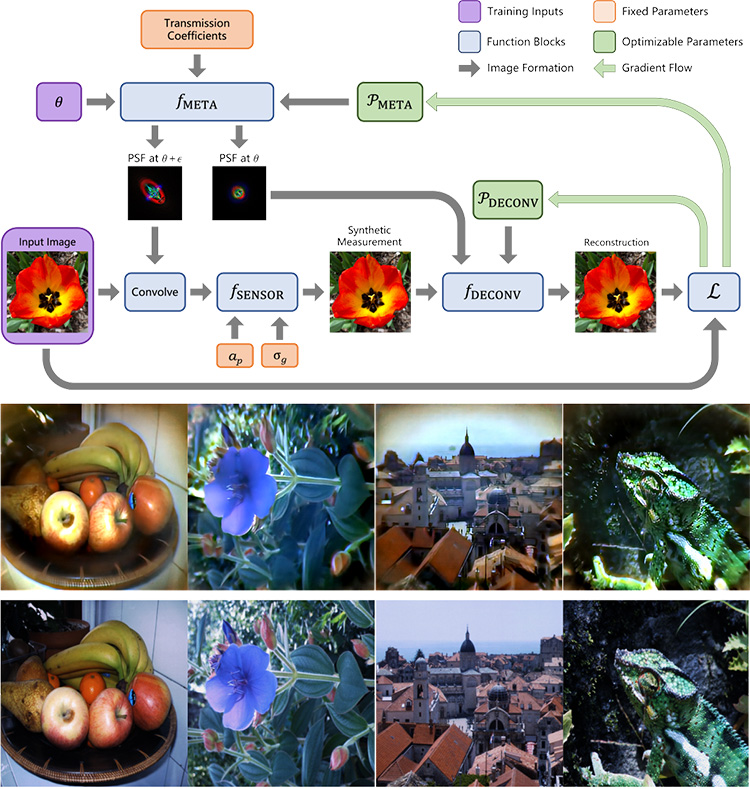

By designing a meta-optic to blur all colors in an identical manner at the sensor plane, we can calibrate this blur and then apply a low-latency deconvolution routine to extract a full-color image from the raw sensor data.<sup>3</sup> Unfortunately, the image quality achieved with this approach has remained poor compared with conventional imaging systems because of deblurring and noise artifacts in the reconstruction.
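The calibrate-then-deconvolve idea can be illustrated with a minimal sketch. It assumes a Wiener filter as the deconvolution routine (one common choice; the text does not name the routine used), a Gaussian kernel standing in for the calibrated blur, and a synthetic test scene. All function names and numerical values are illustrative assumptions.

```python
import numpy as np

def gaussian_psf(shape, sigma):
    """Stand-in for a calibrated blur kernel: a centered, normalized Gaussian."""
    rows, cols = shape
    yy, xx = np.meshgrid(np.arange(rows) - rows // 2,
                         np.arange(cols) - cols // 2, indexing="ij")
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def to_otf(psf):
    """Move the PSF center to the origin and take its 2-D Fourier transform."""
    return np.fft.fft2(np.fft.ifftshift(psf))

def simulate_capture(scene, psf, noise_std, rng):
    """Blur every color channel with the same PSF, then add sensor noise."""
    otf = to_otf(psf)[..., None]  # broadcast one transfer function over all channels
    spectrum = np.fft.fft2(scene, axes=(0, 1))
    blurred = np.real(np.fft.ifft2(spectrum * otf, axes=(0, 1)))
    return blurred + noise_std * rng.standard_normal(scene.shape)

def wiener_deconvolve(raw, psf, noise_to_signal=1e-3):
    """Invert the known blur; the constant keeps noise from being over-amplified."""
    otf = to_otf(psf)[..., None]
    wiener = np.conj(otf) / (np.abs(otf) ** 2 + noise_to_signal)
    spectrum = np.fft.fft2(raw, axes=(0, 1))
    return np.real(np.fft.ifft2(spectrum * wiener, axes=(0, 1)))

# Synthetic RGB scene made of overlapping colored rectangles (assumption).
rng = np.random.default_rng(0)
scene = np.zeros((64, 64, 3))
scene[16:48, 16:48, 0] = 1.0
scene[8:32, 24:56, 1] = 0.8
scene[30:60, 4:30, 2] = 0.6

psf = gaussian_psf(scene.shape[:2], sigma=2.0)
raw = simulate_capture(scene, psf, noise_std=1e-3, rng=rng)
recovered = wiener_deconvolve(raw, psf)

mse_raw = np.mean((raw - scene) ** 2)
mse_recovered = np.mean((recovered - scene) ** 2)
print(f"MSE before deconvolution: {mse_raw:.5f}")
print(f"MSE after deconvolution:  {mse_recovered:.5f}")
```

Because the blur is the same for every color, a single calibrated kernel serves all three channels. The `noise_to_signal` constant trades sharpness against noise amplification, which is the source of the artifacts mentioned above.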

Instead, by relying on a fully differentiable meta-optic model and reconstruction algorithm, we jointly designed the structural parameters of the meta-optic and the parameters of a computational reconstruction network, driven by an image-quality loss at the end of the imaging pipeline. This allowed us to demonstrate high-quality imaging comparable to that of a compound optic with six refractive lenses.<sup>4</sup> In this demonstration, we achieved a reduction of five orders of magnitude in camera volume while maintaining high quality in terms of color and spatial content.
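A toy version of this joint design can be sketched as follows. It is not the method of the cited work: a single hypothetical `focus` parameter stands in for the meta-optic's structural parameters, a Wiener filter with one tunable regularizer stands in for the reconstruction network, and central finite differences stand in for automatic differentiation. The blur model and all constants are assumptions chosen only to make the example run.

```python
import numpy as np

WAVELENGTHS = np.array([0.45, 0.55, 0.65])  # illustrative blue/green/red, micrometers
N = 128                                     # samples in each 1-D test signal

def gaussian_otf(sigma):
    """Transfer function of a Gaussian blur with width sigma (in pixels)."""
    freqs = np.fft.fftfreq(N)
    return np.exp(-2.0 * (np.pi * sigma * freqs) ** 2)

def pipeline_loss(params, scene, noise):
    """End-to-end loss: simulate capture, reconstruct, compare with the scene."""
    focus, log_k = params
    k = np.exp(log_k)  # optimize log(k) so the regularizer stays positive
    total = 0.0
    for c, wavelength in enumerate(WAVELENGTHS):
        # Toy optics model (assumption): blur grows as a channel's wavelength
        # moves away from the design wavelength `focus`.
        sigma = np.sqrt(0.5**2 + (40.0 * (wavelength - focus)) ** 2)
        h = gaussian_otf(sigma)
        measured = np.real(np.fft.ifft(np.fft.fft(scene[c]) * h)) + noise[c]
        # Toy reconstruction (assumption): Wiener filter with regularizer k.
        estimate = np.real(np.fft.ifft(np.fft.fft(measured) * h / (h**2 + k)))
        total += np.mean((estimate - scene[c]) ** 2)
    return total / len(WAVELENGTHS)

def numerical_gradient(f, params, eps=1e-4):
    """Central finite differences, used here in place of autodiff."""
    grad = np.zeros_like(params)
    for i in range(len(params)):
        step = np.zeros_like(params)
        step[i] = eps
        grad[i] = (f(params + step) - f(params - step)) / (2.0 * eps)
    return grad

def joint_design(scene, noise, params, iterations=50):
    """Gradient descent on optics and reconstruction parameters together."""
    params = np.asarray(params, dtype=float)
    f = lambda p: pipeline_loss(p, scene, noise)
    loss = f(params)
    for _ in range(iterations):
        grad = numerical_gradient(f, params)
        step = 1.0
        while step > 1e-8:  # backtracking: accept only steps that lower the loss
            candidate = params - step * grad
            candidate_loss = f(candidate)
            if candidate_loss < loss:
                params, loss = candidate, candidate_loss
                break
            step *= 0.5
    return params, loss

# Synthetic three-channel scene and a fixed noise realization (assumptions).
rng = np.random.default_rng(0)
scene = np.zeros((3, N))
scene[0, 20:60] = 1.0
scene[1, 40:100] = 0.7
scene[2, 70:110] = 0.9
noise = 0.01 * rng.standard_normal((3, N))

initial = np.array([0.45, np.log(1e-1)])  # start focused on blue, heavy regularization
initial_loss = pipeline_loss(initial, scene, noise)
designed, final_loss = joint_design(scene, noise, initial)
print(f"loss before joint design: {initial_loss:.5f}")
print(f"loss after joint design:  {final_loss:.5f}")
print(f"designed focus: {designed[0]:.3f} um, regularizer: {np.exp(designed[1]):.2e}")
```

The point of the sketch is that a single loss, measured on the final reconstructed image, drives both sets of parameters at once, rather than optimizing the optic for focus quality first and tuning the reconstruction afterward.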

We believe that these full-color cameras will find application in thin, compact sensors for mobile photography and video conferencing, as well as in large satellite-based imaging systems. Our work marks a rejuvenation of research on software-defined optics, accelerating the co-design and co-integration of hardware and software for free-space optics.<sup>5</sup>

Researchers

Shane Colburn and Arka Majumdar, Tunoptix and University of Washington, Seattle, WA, USA

Felix Heide and Ethan Tseng, Princeton University, Princeton, NJ, USA

References

1. S.M. Kamali et al. Nanophotonics 7, 1041 (2018).

2. F. Presutti et al. Optica 7, 624 (2020).

3. S. Colburn et al. Sci. Adv. 4, (2018).

4. E. Tseng et al. Nat. Commun., doi:10.1038/s41467-021-26443-0 (2021).

5. D.G. Stork et al. Appl. Opt. 47, B64 (2008).